fuzzing the aretext markdown parser

A few weeks ago, I implemented syntax highlighting for markdown in aretext, the minimalist vim clone I’ve been building. Like most context-sensitive languages, markdown is difficult to parse. Although it handles only a subset of the CommonMark 0.30 spec,1 my implementation required 845 lines of Go code.

Parsing is especially tricky because the code needs to handle any document a user might open. It can’t crash or enter an infinite loop. I ran the markdown parser through all 652 tests from the CommonMark spec, but I still felt nervous about missing some edge case.

Fortunately, Go 1.18 introduced a framework for fuzz testing. Fuzz tests try to find bugs in a program by feeding it randomly generated inputs. This can catch bugs that other kinds of tests might miss.

The test I wrote is pretty short. Here’s an annotated version:

// This looks very similar to an ordinary Go test, except that we're using

// testing.F (for "fuzz test") instead of testing.T.

func FuzzMarkdownParseFunc(f *testing.F) {

// I had already serialized the CommonMark test cases to JSON for the unit tests,

// so I re-used them here as "seed" inputs to the fuzzer.

// The fuzzer will randomly mutate these to produce new test inputs.

testCases, err := loadCommonmarkTests()

if err != nil {

f.Fatalf("Could not load markdown test seeds: %s", err)

}

for _, tc := range testCases {

f.Add(tc.text)

}

// Instantiate a "parse func" for Markdown. This parses part

// of a Markdown document. Since it's stateless, we can reuse

// it for every test execution.

parseFunc := MarkdownParseFunc()

// Now we call `f.Fuzz` to feed the randomly generated test inputs to the parser.

f.Fuzz(func(t *testing.T, data string) {

// Instantiate a new parser. This is stateful, so create a new

// one for each test execution.

p := parser.New(parseFunc)

// Load the test data into a `text.Tree` (aretext's B+ tree data structure for text).

tree, err := text.NewTreeFromString(data)

if errors.Is(err, text.InvalidUtf8Error) {

// Skip any input that isn't valid UTF-8.

// Aretext performs the same validation before attempting to parse the document,

// so the parser assumes its input is valid UTF-8.

t.Skip()

}

require.NoError(t, err)

// Now parse the document as markdown! If this panics or takes too long,

// the fuzz test will fail.

p.ParseAll(tree)

p.TokensIntersectingRange(0, math.MaxUint64)

})

}

To start fuzzing, simply pass the -fuzz flag to go test, like this:

go test -fuzz=FuzzMarkdownParseFunc ./syntax/languages/

By default, the fuzz tests run indefinitely. How long is long enough? I couldn’t find much guidance online, so I decided to run it for at least two weeks.

I rented a 4-core Linux server from Linode. Ordinarily this costs $40/month, but I had some free credits to burn. It took maybe twenty minutes to spin up the server, install Go, clone the aretext repository, and start the fuzz test. CPU immediately spiked to 100% on all cores.

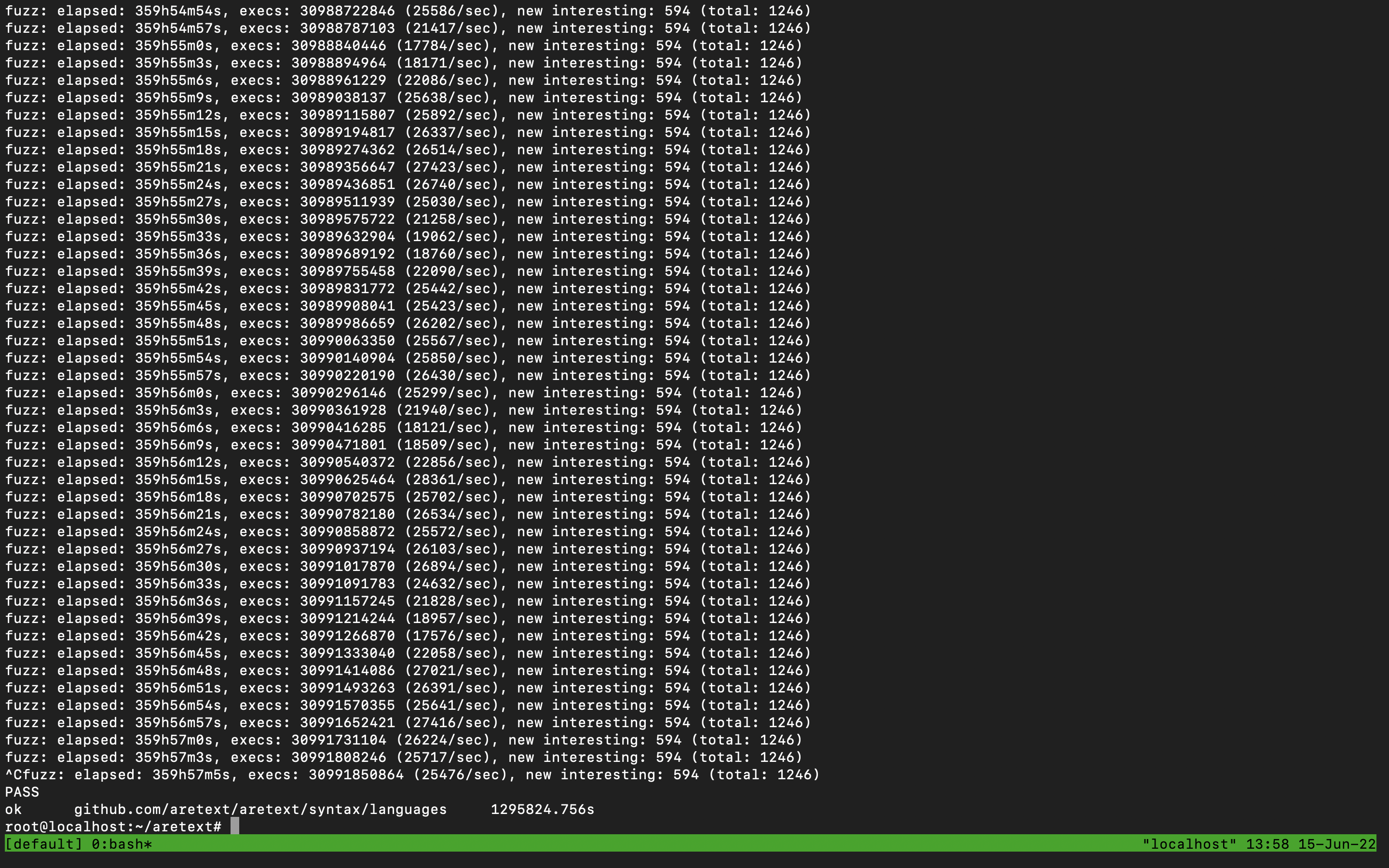

About two weeks later, I logged in and stopped the test:

- The test ran for 359h 57m 5s, which is about 15 days.

- Total cost was about $20 before applying free credits.

- The test parsed 30,991,850,864 inputs. Yes, that’s over 30 billion executions.

- Of these inputs, 594 were “new interesting,” meaning that they caused a new code path to execute.

I wish I could end this post with “and then the test discovered a critical bug,” but it didn’t. Over time, the number of “new interesting” inputs per day seemed to plateau. The last few days, the test found only a couple new inputs, so I decided to stop it. Maybe if it had continued it would have found something?

Quick aside: the CommonMark spec was written by John MacFarlane, who taught one of my favorite undergraduate Philosophy courses at UC Berkeley! ↩︎